The Case for Scientific Failure

Piecemeal lessons from Karl Popper on the importance of falsification in science

By Natasha Mott

We’ve got a big problem in science: We’re not failing enough. Although it seems we hear about new cases of academic fraud or falsification of data on a daily basis, this isn’t failure. It’s a symptom of our pathological fear of failure, and the wrong kind of falsification.

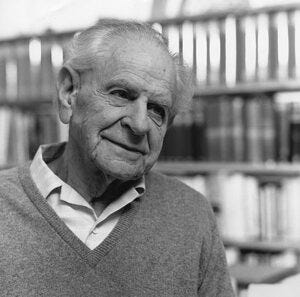

Scientific knowledge only succeeds when it survives rigorous attempts to kill it. This is the kind of falsification that leads to progress, according to one of the most influential philosophers of the 20th century, Karl Popper. However, we’ve designed a system that incentivizes the bad kind of falsification in a maligned attempt to obtain “positive” results. Instead of wagging our fingers at fraudulent researchers, perhaps we should reevaluate the incentives of our system across academia and industry alike and celebrate researchers who go to great lengths to rigorously refute spurious claims.

Indeed, for science to progress, we must be cognizant of ever-growing market pressures, scientific careerism and our unquenchable thirst for certainty that we believe only science can satisfy. We need to reward careful science, over hasty claims. More specifically, the quality of science might dramatically improve if grants were awarded to scientists for attempts at falsifying old theories rather than the discovery of new ones; if we published and celebrated evidence against popular theories instead of perceived confirmations; and if tenure-track positions that are supposed to allow for bold conjecture and daring refutations were awarded to scientists who refute their own findings rather than those who bolster established dogmas. Perhaps if we popped bottles of champagne every time a theory is refuted, we might ultimately realize more scientific success and have fewer stories about academic fraud and how entire scientific fields were built on vapor.

Ruling out ideas is a way of brushing up against truth, without having to prove it, which is a seemingly impossible task. A self-proclaimed “negativist,” Popper drastically changed how we conduct science by opposing the logical positivists of his time, who believed that science must be “verified” through positive observations. Popper hoped to solve what 18th-century philosopher and essayist David Hume called the problem of induction, which can be explained best by a simple example: Suppose you’ve only ever seen white swans. In this case, you might hypothesize that all swans are white, and to verify this you’d have to find every swan and confirm that it is, in fact, white. Popper suggests you don’t actually need to do that. If even a single nonwhite swan were found, it would falsify this hypothesis. While Popper’s solution is more of a workaround, this small difference in scientific focus makes a big difference not only theoretically, but also in the way we use resources to acquire information; the approach of tracking down every swan in the world might require substantially more resources and a different strategy from simply hunting a single nonwhite swan.

We spend a lot of money trying to obtain (and illustrate) positive findings rather than hunting bold refutations. In 2019, for instance, the pharmaceutical industry spent $83 billion on research and development, while the National Institutes of Health spent $39.2 billion. Industry resources are sharply pointed at problems with a high probability of positive, if only incremental, solutions. Drugs only have to be slightly different from their competitors to gain approval, and over 60% of drugs listed on the World Health Organization’s essential list are considered “me-too” drugs. And in novel therapeutic areas, we actively accelerate the process of drug development for products that don’t work, which can lead to the approval of drugs with marginal efficacy that will sit on the market for years with huge price tags to try to compensate for the cost of developing drugs that are clinically ineffective.

Companies allocate resources to studies that furnish positive, fruitful data for new products rather than working toward refuting their existing clinically ineffective products, because doing so would create self-competition in the market. Discovering a new drug candidate, however, is lucrative, especially when a company can outsource the identification of drug candidates to academic scientists, who do this work via public funding by way of grants. Publicly funded grants are geared toward basic science research with less direct market utility, and this allows for more exploratory research furnishing negative results. So far, so good. However, these grants require applicants to imagine the potential (positive) downstream impact of their results, which is chum in the water for companies that are hungry for blockbuster drugs, and then subsequent me-toos. A small tweak in grant application requirements could reframe the incentives of science where an applicant’s expected “impact” could be replacing clinically ineffective drugs which eat up billions of federal healthcare dollars by languishing on the market waiting for unsuspecting Medicare recipients to try them.

Securing public research grants requires that scientists convince seasoned experts that they have the track record and home institution to accomplish the aims of the grant, and of course, they only attain that track record and institution by publishing positive data prior to said grant. As a result, academic scientists spend the bulk of their most precious resource, time, hunting for novel, positive results to bolster a compelling grant application. Any refutation that happens is done quietly, unmentioned and behind the scenes, because securing funding is about showing off what works rather than what doesn’t. Scientists are forced to focus disproportionate energy on the short term, “selling their science” to granting agencies, rather than on rigorously evaluating their theories.

In our rush to sell positive results, we don’t celebrate failed theories as stepping stones, as Popper suggested we should. In “Conjectures and Refutations,” he said:

Refutations have often been regarded as establishing the failure of a scientist ... It should be stressed that this is an inductivist error. Every refutation should be regarded as a great success; not merely the success of the scientist who refuted the theory, but also of the scientist who created the refuted theory, and who thus in the first instance suggested, if only indirectly, the refuting experiment.

We don’t celebrate these failed theories because they remind us of the uncertainty of our pursuits and our own fallibility. This is why you won’t find champagne bottles popping in the lab when a theory is refuted. Instead, if a negative piece of data is obtained or a competitor publishes contrary results, you see grad students scurrying to get preliminary data for the next grant or publication so that they can “get there first”—even if only with shallow claims. Later, if those claims are refuted, projects and even entire labs may be abandoned in pursuit of something else that might “work.” Between grant writing, publishing, teaching and managing students, academic scientists are spread thin with little time to reflect on lessons from failed theories. As a biomedical scientist, I had little time to study even famous discredited scientific theories like phlogiston. It wasn’t until I left academia and started studying the philosophy of science that I learned how the failure of these theories provides extraordinary insight into how we do science.

Critique is seen as a nuisance and a reminder of our fallibility in contemporary science when it should be highly regarded as the cornerstone of the practice. Scholarly irritation with critique is represented by the well-known myth of “Reviewer Number 2.” The standing joke is that everyone hates Reviewer Number 2 because they provide the most challenging peer review, blocking the path to publication. It’s a good sign that we can joke, but we need to go further: We need to love our critiques. Popper modeled appreciation of critique in many ways, one of which was his habit of beginning a scathing response to a critique with a kind word or two and a palpable sense of gratitude for the thoughtful interest of his critic (even when he thought they had blatantly misinterpreted his argument). He carried this scrappy energy with him everywhere, as seen in one encounter with philosopher Dorothy Emmet: upon meeting her, he launched an “attack” on her ideas and argued “until midnight,” after which, she recalls, he seemed satisfied and became gentler and more affectionate. He didn’t appear to view his critics’ refutations as cumbersome obstacles that he should begrudgingly climb over on his path to a positive confirmation of his theories. He appreciated their thoughts. He also didn’t appear to ascribe ill intent or career sabotage to his critics, as has been suggested today in cases such as one in which legal action was threatened against a well-known expert in scientific misconduct when she challenged the integrity of a COVID-19 treatment. Critique today leads scientists to assume bad faith on the part of their peers.

Our assumption of bad faith is, at least in part, baked into the careerism associated with becoming a professional scientist. The publication system and incentives promote quantity over quality, leading to the churning out of trivial papers, not theories. “Publish or Perish” is a reality for scientists who must submit their work faster than the next lab. The peer review system is meant to prevent erroneous data and improve the accuracy of scientific literature, but lately, it isn’t working. The current publication and granting systems might incentivize bad data, or at least less-than-rigorous testing in favor of volume. Scientists hold their data close to the vest out of fear of being “scooped” by the competition because once the discovery they’ve been working on is published, a secondary paper on the subject doesn’t have nearly the same pizazz, even if the theory is more stringently tested the second time around. If the worst happens and a theory eventually leads to a dead end where positive results stop coming, scientists must quickly pivot to an alternate problem. If the paper is important enough that down the road someone discovers erroneous data or a flaw, this kind of refutation of a previous theory might mean the retraction of a prior publication. That’s problematic, because publications are the currency of the realm, and a retraction is a debit that is not well tolerated, especially for a professor without tenure. When scientific competition for this type of currency is this steep, its utility is almost by definition questionable.

If scientists are required to be infallible to obtain tenure, tenure might not be serving one of its most important functions: fostering scientific progress by allowing for and testing bold theories without fear of losing one’s job. Obtaining a tenure-track position where you might eventually prove yourself and gain the ultimate job security requires a substantial publication record and preliminary grants, so early-career scientists who are more likely to take risks are incentivized to play it safe, writing perfunctory grants and satisfying a laundry list of bureaucratic tasks that will ensure they achieve the goal of getting tenure. We need to give young scientists room to be wrong in a big way, and they’re not going to do that when the future of their career hinges on them producing only positive findings.

Many of us have heard the argument that Einstein might not have fared well in today’s academic environment, which favors safe, assembly-line science and box-checking. Imagine, today, a 20-something kid out of college who can’t find a teaching job, submitting numerous papers to academic journals (as well as a whole doctoral dissertation) while working at a patent office without a university affiliation. An editor for today’s Annalen der Physik (which published the young Einstein in 1901) would toss the theory of special relativity out before it even made it to Reviewer Number 2. Can you imagine that same kid taking an unsalaried lectureship after submitting risky but groundbreaking papers on special relativity, Brownian motion and the photoelectric effect? Einstein wasn’t even at the level of an adjunct, but he continued to try to refute his own theory, leading to the theory of general relativity. Today’s careerist tenure-track system might have had Einstein saving his riskiest theories for much later, with some possibly never seeing the light of day.

Einstein is lauded for his discoveries, but as with many incredible scientists, people overlook the significant risk he took in not only exposing his theories to refutation but actively trying to blow them up. Another scientist interested in blowing things up, Alfred Nobel, the inventor of dynamite, established a prize for significant contributions to human knowledge in 1895. The recipients of this prize are considered heroes, but sadly, these heroes’ productivity declines after receiving this great honor. As you might guess, Popper wasn’t a fan of putting heroes on a pedestal, but he most admired iconoclast scientists like Einstein, Newton, Kepler and even pre-Socratic thinkers like Anaximenes and Thales, whom Popper credits as willing to challenge their own theories as well as those of their heroes. Popper might have favored an anti-Nobel prize, for scientists who refute their most precious theories.

Far from perfect, Popper’s idea of falsification sparked serious debate over more than white swans, yet his legacy lives on owing to his willingness to confront uncertainty and the possibility of his failure. Popper has been criticized for not acknowledging the value of inductive methods, but he did advocate for critically evaluating empirical evidence to drive progress rather than a total overhaul. To this effect, he might agree that we need not entirely dismantle our scientific institutions, only tweak them. If we want to leave a legacy of advancing knowledge, we must embrace uncertainty and the possibility that the current approach may be flawed.

While science projects an illusion of certainty to outsiders, insiders recognize that we only get closer to the truth when we accept uncertainty and risk failure. If we hope to engineer a flourishing open society built on scientific progress, we need more than the false certainty obtained from only tolerating positive results. We must embrace failure by incentivizing our scientists to make bold conjectures coupled tightly with rigorous refutation, over building safe careers and temporary stacks of publications. And we need to embrace Popper’s humble motto: “I may be wrong and you may be right, and, by an effort, we may get nearer the truth.”