How To Get the Future We Were Promised

In ‘Where Is My Flying Car?,’ J. Storrs Hall claims government regulation, not technological incapacity, is holding us back

By Adam Thierer

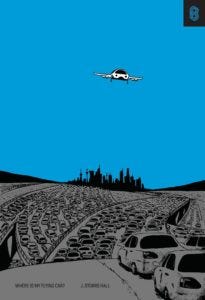

Where is “The Jetsons” future we were promised—the one with robot maids, abundant power and flying cars? In his new book, “Where Is My Flying Car?,” scientist J. Storrs Hall provides an answer that won’t sit well with many: That future was within our grasp, but it was stolen away from us.

Hall’s title serves as the launching point for a robust investigation into a broader, more critical question: Why have technological advancements slowed down in important sectors such as transportation, nanotechnology and energy? What held back progress? The problem, he argues, is anti-technological thinking and the overregulation that accompanies it. It comes down to a progress-restricting cocktail of negative cultural attitudes toward innovation, incumbent companies or academics looking to protect their turf, litigation-happy trial lawyers and a raft of risk-averse laws and regulations.

Over the past three years, buzz has grown gradually about Hall’s book, which first appeared as a Kindle book in 2018. A beautiful hardbound version of the book was recently published by Stripe Press. Hopefully, that will give it the attention it deserves because “Where Is My Flying Car?” is the most important book on technology policy written in the past quarter century. Hall has perfectly defined what is at stake if we fail to embrace a pro-progress policy vision going forward.

How to Kill Innovation Culture: The Machiavelli Effect

“Where Is My Flying Car?” is packed with meticulously detailed, but entirely comprehensible, explanations of highly technical matters, including the nuts and bolts of nanotech, the fundamentals of nuclear fusion and everything you ever wanted to know about planes, helicopters and gyros—including how to both build and pilot them. Hall goes to great lengths to show that we already possess the know-how to create and benefit from those technologies. “The reason we don’t have flying cars today isn’t technological feasibility,” he says. “We have had the means to build, manufacture, and improve flying cars for the better part of a century.” The same is true for robotics, nanotech and especially nuclear technology.

But something went horribly wrong beginning around the late 1960s and early 1970s that slowed progress in these fields. Hall documents an important shift in public and political attitudes during this period. While societal attitudes in the early 20th century reflected general excitement about new innovations—or at least an openness to them—by the 1960s, Hall says, we began witnessing growing “susceptibility to baseless horror stories and hostility to technology, and particularly to energy.”

This shift in thinking was accompanied by a flood of new federal agencies and rules, as well as the beginning of what economists refer to as “the Great Stagnation,” the slowdown in innovation and productivity enhancements that continues to the present day. While scholars debate its severity and causes, most generally agree that a productivity slowdown began around the 1960s. Many of them also agree with Hall that “the strangling red tape of regulation” probably had something to do with it. “Over the long run, unchecked regulation destroys the learning curve, prevents innovation, protects and preserves inefficiency, and makes progress run backward,” Hall argues. “Looked at under the hood, the Great Stagnation was really the Great Strangulation.”

But what precisely happened to America’s innovation culture that spawned this flood of new regulations? Hall coins the term “the Machiavelli Effect” to identify the culprits. He builds on this passage from Niccolò Machiavelli’s classic 1532 study of political power, “The Prince”:

[I]t ought to be remembered that there is nothing more difficult to take in hand, more perilous to conduct, or more uncertain in its success, than to take the lead in the introduction of a new order of things. Because the innovator has for enemies all those who have done well under the old conditions, and lukewarm defenders in those who may do well under the new. This coolness arises partly from fear of the opponents, who have the laws on their side, and partly from the incredulity of men, who do not readily believe in new things until they have had a long experience of them. Thus it happens that whenever those who are hostile have the opportunity to attack they do it like partisans, whilst the others defend lukewarmly, in such wise that the prince is endangered along with them.

Hall notes that the Machiavelli Effect “has nothing to do with any conspiracy.” Rather, it comes down to human nature: Many people fear the new and different, and they also want to protect whatever status quo they benefit from (or at least feel comfortable with).

Hall admits he is not the first to identify the Machiavelli Effect. He notes that Isaac Asimov pinpointed the same trend when he discussed in a 1974 lecture how there had been “bitter, exaggerated, last-ditch resistance . . . to every significant technological change that had taken place on earth.” While the opponents of change typically insisted that “[i]t was always the good of humanity that rested upon their hearts,” in reality, Asimov noted, “the resistance came from those groups who stood to lose influence, status, money . . . as a result of the change.”

The Machiavelli Effect, then, seems like the normal course of things. Opposition to change is part of a familiar recurring cycle of initial resistance, gradual adaptation and then eventual assimilation into society. Unfortunately, however, some technologies never get past the resistance stage because negative societal attitudes get translated into restrictive policy defaults that flatly prohibit progress in certain fields.

How the Precautionary Principle Clobbers the Learning Curve

This is essentially what happened in America over the past half century as “regulation clobbered the learning curve” for nuclear, nanotech and aviation technologies, Hall argues. The learning curve for any sector or technology is a measure of how fast we can learn from mistakes, improve productivity and lower costs. Regulation undermines the learning curve by setting the policy default to “no trial and error” rather than “free to experiment.” This is the difference between the precautionary principle and permissionless innovation.

In my 2016 book on the clash between these two governing philosophies, I noted how the precautionary principle generally curtails or disallows innovations until developers can prove that they will not cause any harm to individuals, groups, specific entities or various existing laws, norms or traditions. By contrast, permissionless innovation means that experimentation with new technologies and business models should generally be allowed by default. The precautionary principle gives innovators a policy red light, stopping or greatly limiting experimentation through prior restraints. Permissionless innovation is a policy green light, giving innovators a chance to experiment. If problems develop, it relies on more flexible ex post solutions.

The fundamental problem with the precautionary principle, as I argued in my book, is that “living in constant fear of worst-case scenarios—and premising public policy on them—means that best-case scenarios will never come about.” If societal attitudes and public policy will not tolerate the idea of any error resulting from experimentation with new and better ways of doing things, then we will obviously not get many new and better things! Hall notes how Wilbur Wright captured this sentiment brilliantly when he said, “If you are looking for perfect safety, you would do well to sit on a fence and watch the birds.” Of course, humans would have never taken to the skies if the Wright brothers had not gotten off the fence and taken the risks they did. There can be no reward without some risk.

A risk pays off. The Wright brothers' experimental flight in 1903 revolutionized the way humans travel. Image Credit: John T. Daniels/Library of Congress via Wikimedia Commons

It is surprising that Hall does not spend more time discussing the precautionary principle by name. Nonetheless, he perfectly diagnoses how risk aversion and anti-technological hysteria have motivated the repressive regulatory policies of the past 50 years in sectors where we most needed profound jumps up the learning curve. Nuclear power is the most galling example because we had already come so far. “The low-hanging fruit of nuclear power was left to rot on the ground, and it was sprayed with the kerosene of hysteria and ignorance,” Hall says. After the Nuclear Regulatory Commission (NRC) was formed in 1975, for example, new plant licensing promptly plummeted. Just recently, after a two-year wait, the NRC denied an application to build a compact fast reactor in Idaho, continuing its long history of making innovations in this space nearly impossible.

In the wake of the March 2011 Fukushima nuclear accident in Japan, governments across the globe have moved even faster to dismantle nuclear technology. Most notably, Germany has moved to phase out half its nuclear plants even though an energy shortage haunts Europe. And in the U.S., no new plants are being built, and older ones are being gradually decommissioned due to either age or regulatory opposition.

The consequences will be disastrous. Following Japan’s decision to halt nuclear power, the country went from 30% nuclear power production to 0% in just 14 months. A 2019 NBER study entitled “Be Cautious With the Precautionary Principle: Evidence from Fukushima Daiichi Nuclear Accident” found that Japan needed to shift back to fossil fuels to offset that shortfall. That policy shift resulted in rapid hikes in electricity prices and 1,280 fatalities from cold-weather-related problems. “This suggests that ceasing nuclear energy production has contributed to more deaths than the accident itself,” the authors concluded, since “[n]o deaths have yet to be directly attributable to radiation exposure” from the accident.

Moreover, the shift back to fossil fuels has obvious implications for ongoing efforts to address climate change. The International Energy Agency recently reported that coal-fired power generation reached an all-time high in 2021. Thus, the precautionary principle undermines not just innovation, but also the very public health and safety it is nominally meant to support.

Talent Shift

Hall argues the post-1970 flood of new technological regulations had not only direct costs that were quite visible (less innovation, denied choice, higher prices, lower quality), but also equally significant indirect costs in the form of a misdirected allocation of societal resources and talent. He bemoans the negative consequences of “taking more than a million of the country’s most talented and motivated people and putting them to work making arguments and filing briefs instead of inventing, developing, and manufacturing.”

Hall notes how, following the sudden change in public attitudes and government regulation surrounding nuclear power in the late 1960s and early 1970s, “interests, and career prospects, in nuclear physics imploded” and “major discoveries stopped coming.” Perhaps unsurprisingly, enrollment in law schools skyrocketed during that same period. Not everyone who ended up going to law school would have become a physicist, of course. Still, many people got the message: Shift from the hard to the soft sciences.

This sort of mass talent migration has consequences. “We poured increasing torrents of money into the ivory tower and virtue signaling, and it increasingly took our best and brightest away from improving our lives,” Hall writes. For the brave souls who still hope to make a career as nuclear physicists, Hall warns that they should be prepared to be “misconstrued and misrepresented by activists, demonized by ignorant journalists, and strangled by regulation.”

Not all engineering sectors suffered the same fate. Most notably, a huge number of people flocked to the information technology (IT) sector. But this is telling. One of the reasons that IT became a more attractive field to many workers, entrepreneurs and investors was that it enjoyed the green light of permissionless innovation as its policy default. The result was an explosion of innovative activity and America’s dominance in global digital technology.

Problems with Government Science Funding

Hall’s indictment of modern innovation policy does not end with regulatory encumbrances. He is equally critical of government research and development (R&D) efforts. “One of the great tragedies of the latter 20th century, and clearly one of the causes of the Great Stagnation,” he argues, “was the increasing centralization and bureaucratization of science and research funding.”

Hall identifies two problems with over-centralized government funding of science: It can lead to a stodgy, insular system in which the old guard protects its own interests, and it can also crowd out private-sector funding efforts. He says “ivory-tower syndrome”—a closed-mindedness and protectionist instinct among those who benefit from a well-settled status quo—has crept into federal R&D programs and agency funding processes. “Centralized funding of an intellectual elite makes it easier for cadres, cliques, and the politically skilled to gain control of a field, and by their nature they are resistant to new, outside, non-Ptolemaic ideas.” He is particularly critical of how this don’t-rock-the-boat mentality has set back research on cold fusion, where the old guard threw up roadblocks to potentially important research. “The ivory tower has a moat full of crocodiles,” he says, and “we increasingly have major social institutions whose prestige comes in substantial part from virtue signaling rather than actually producing useful results.”

Worse yet, Hall says, is the crowding-out effect created by federal R&D programs. To those who claim massive government R&D is needed to spur innovation and growth, he says “the evidence simply does not support the conclusion.” Hall points out that “the great innovations that made the major quality-of-life improvements came before 1960 . . . and they were all developed privately.” He recites a long list of such inventions: refrigeration, vacuum cleaners, gas and electric stoves, fertilizer and almost every media and communications technology.

He notes that the Great Stagnation has coincided with a massive increase in federal science funding, and that the most explosive periods of innovation and growth in both the U.S. and Britain came when the countries “were squarely in laissez-faire mode.” The issues of over-centralized science bureaucracy and turf-protecting constituencies further exacerbate the crowding-out problem.

It is hard to see how this problem will be reversed with industrial policy experiencing a major resurgence in the U.S. and Congress considering a 2,300-page, $250 billion, pork-laden Innovation and Competition Act. Despite all the evidence supporting Hall’s indictment of past government support measures, the tech pork barrel appears ready to roll on.

Reclaiming a Culture of Experimentation

How do we get progress back on track and reclaim the future we should have already had? To begin, “We need a new philosophy of progress,” argues Jason Crawford, who started the nonprofit Roots of Progress to advance that cause. “We live in an age that has lost its optimism” about the prospects for improvement, he argues.

Toward that end, it would help if more innovators, entrepreneurs and scientists stepped up to make the case for progress. “Where Is My Flying Car?” makes a vital contribution to this mission. Hall’s book is probably the most full-throated and rigorous defense of innovation by a trained scientist or engineer since Samuel C. Florman’s “The Existential Pleasures of Engineering” was first published in 1976. The Wall Street Journal called Florman’s book a “hymn to homo faber,” a description that fits Hall’s book equally well as a celebration of the potential for humanity to build more and better tools to improve the world.

Crucially, Florman and Hall make the moral case for progress. It’s the same moral case made in books by Virginia Postrel (“The Future and Its Enemies”), Steven Pinker (“Enlightenment Now”), Matt Ridley (“How Innovation Works”) and Deirdre McCloskey in her trilogy about the history of modern economic growth. These scholars argue that there is a symbiotic relationship between innovation, economic growth, pluralism and human betterment, and that to deny people the ability to improve their lot in life is fundamentally anti-human. “The zero-sum society is a recipe for evil,” Hall says, because it leaves us with a “static level of existence” that denies us the ability to dramatically improve the human condition.

Hall’s additional contribution to this effort is to clarify that, when thinking about how we move the needle on progress, incrementalism just won’t cut it. We need big, paradigm-busting innovations, not just tweaks on yesterday’s successes. We have already witnessed multiple paradigm shifts in the IT sector over the past 30 years because it has been governed by a more flexible, pro-experimentation policy framework. Why can’t the policy vision we have for digital bits be the same as the one for physical atoms?

If we hope to expand our technological horizons and improve the welfare of all humans on the planet, we need to fundamentally rethink public policies toward innovation. We need to start building again. Unfortunately, columnist Ezra Klein notes that “the institutions through which Americans build have become biased against action rather than toward it.” Similarly, The Atlantic’s Derek Thompson argues that the U.S. desperately needs “a revitalized culture of experimentation” that it has lost. They’re both right, but we’ll never be able to start building things again if our nation’s innovation policy culture discourages experimenting and building by keeping the precautionary principle as its default for many of our most important sectors.

Accordingly, the age of risk-averse, “set it and forget it” government administration must end. The costs are too high. The easiest way to clear out the progress-retarding regulatory deadwood is to start sunsetting large portions of the U.S. Code of Federal Regulations in a systematic fashion, thus forcing a regular reevaluation of the rules governing technology sectors. Going forward, regulation should be focused on addressing real problems in a reasonable fashion. Just preserving archaic tomes of rules from bygone technological eras doesn’t help anyone except those protected from competition by such rules.

In sum, Hall says, we need to push for “a world of makers instead of takers.” We need to focus on the benefits of “the dynamic society” and end the long reign of stasis-minded thinking that has dominated over the past half century and given us the Great Stagnation. Our future is still there for the taking. Hall has given us the road map to get back on track.